Want a high availability mysql cluster were you can access all the database nodes at the same time? As in Primary-Primary-Primary-Primary-etc setups but without the headache that comes with those?

Then this is the one for you.

In here I will show how to make, configure, and use a cluster that will automatically balance and share all the data within it. It does it automatically so you can write/change mysql data to one host and then read (or write) it from/to another host, and it won’t miss a beat.

Before you begin

Galera only can use InnoDB tables. If you have any MyISAM tables, you will need to convert them to InnoDB (MyISAM is not transactional). The only exception is the system mysql.user table. Do NOT convert this, as mysql needs it as a MyISAM. Just remember to only use CREATE USER commands and such to make/edit users. Only then does it get copied over to the other hosts.

Reads are very fast. Using sysbench in comparing a Galera cluster to a single instance database, for loads with mostly reads, the Galera cluster of 4 nodes was almost twice as fast as the single. It did 427028 vs 239680 reads in 60 secs, at 7321 vs 3994 per sec, averaging 1.91ms vs 3.50ms on a single request. That makes sense that a cluster would be faster at reading.

On the other hand, with mixed read and writes, the Galera cluster was somewhat slower for me at a total of 160246 vs 220134 read/writes in 60 secs, or 2670 vs 3668 per second, averaging 7ms for a query vs 5ms on the single. An optimized cluster and changing some my.cnf values may improve this, as I was using the default settings. It may be because it has to update the cluster with each write. I may look more into this at a later time.

The other thing to know is you must always have at least 2 nodes running at any one time. If it ever drops to 1, the last server will stop responding to requests due to a possible split brain issue.

Lastly, the Galera Cluster does automatically balance between the nodes. The node you connect to has the data from the others, and will push writes it receives to the other ones.

You can use other software to load balance it, however you will also need to split your reads from your writes, otherwise, depending on your data and uses, you may get ‘deadlock’ issues where two or more hosts are trying to edit the same data.

Installation

Here some basic installation instructions. These are just some suggestions. All you need is MariaDB and the Galera plugin.

apt-get install mariadb mariadb-server galera

dnf install mariadb mariadb-server galera

pacman -Sy mariadb perl-dbd-mysql lsof

It needs lsof for the cluster to work properly. galera must be installed from source, found below.

If your distro doesn’t provide galera, then go to the

Installing Galera from Source page, and follow the instructions on how to install it.

Setting it up

You may use your own current databases. But remember that you’ll need to convert any MyISAM databases, as Galera only supports InnoDB.

To make new databases, you usually use this command. Modify if needed.

mysql_install_db --user=mysql --basedir=/usr --datadir=/var/lib/mysql

Do NOT start up databases at this point! We will get to that point…

Configuration

These servers used an 10.20.30.101-104 network to communicate. Add the following to your my.cnf. For now I would not use any optimized settings or other [mysqld] [mysqld_safe] until you know what you’re doing.

The MariaDB server

[mysqld]

port= 3306

datadir=/var/lib/mysql

socket=/run/mysqld/mysqld.sock

user=mysql

binlog_format=ROW

bind-address=0.0.0.0

default_storage_engine=innodb

innodb_autoinc_lock_mode=2

innodb_flush_log_at_trx_commit=0

innodb_buffer_pool_size=122M

wsrep_on=ON

wsrep_provider=/usr/lib/libgalera_smm.so

wsrep_provider_options="gcache.size=300M; gcache.page_size=300M"

wsrep_cluster_name="awesome_cluster"

wsrep_node_name=host01

wsrep_node_address="10.20.30.101"

wsrep_cluster_address="gcomm://10.20.30.101,10.20.30.102,10.20.30.103,10.20.30.104"

wsrep_sst_method=rsync

[mysql_safe]

log-error=/var/log/mysqld.log

pid-file=/run/mysqld/mysqld.pid

You may have to update the above. Make sure you set wsrep_on=ON. And that wsrep_provider=/usr/lib/libgalera_smm.so is the right path to the libgalera_smm.so library.

Set wsrep_cluster_address to the list of your servers ip addresses composing your cluster. You may want to customize each so it doesn’t have it’s own address in the list, but I found it would just ignore it anyways.

On each host set the wsrep_node_name and wsrep_node_address values to the current host’s name and address.

Be aware that a Galera Mariadb cluster can use a ton of ram, and you may not be able to predict it. So set wsrep_provider_options="gcache.size=300M; gcache.page_size=300M" to a high enough value but not too high value.

Firewall

Open up your firewall between your nodes for tcp ports 3306, 4567, 4568, and 4444; and udp port 4567.

Here is an example for iptables/ip6tables rules to open up the ports on interface ‘internal’.

# For your mysql connection

-A INPUT -i internal -m tcp -p tcp --dport 3306 -j ACCEPT

# Mysql cluster

-A INPUT -i internal -p tcp -m tcp --dport 4567 -j ACCEPT

-A INPUT -i internal -p tcp -m tcp --dport 4568 -j ACCEPT

-A INPUT -i internal -p tcp -m tcp --dport 4444 -j ACCEPT

-A INPUT -i internal -p udp -m udp --dport 4567 -j ACCEPT

Be sure to restart/reload your firewall. For example:

systemctl restart ip{,6}tables

# or: service iptables restart

Starting the database

Warning

Do not start them yet.

As noted before, do NOT just start those databases. We need to first start up an initial (Primary) database, to bootstrap the cluster. Then we’ll start the others and they will connect to it.

To bootstrap it, make sure you have initialized the databases and added the config and firewall rules above. Then run this on one of the servers.

[root@host01]: mysqld_bootstrap

or on other systems

[root@host01]: galera_new_cluster

It will just stay on the terminal. Don’t expect it to fork and give your terminal back. This will start the first node as a new cluster, in a mode where it’s ready for others to connect to it.

Now, you can start mysql on another host:

[root@host02]: systemctl start mysqld

# or: service mysqld start

Using journalctl -f on this second host you’ll should see it connected

WSREP: wsrep_load(): loading provider library '/usr/lib/libgalera_smm.so'

WSREP: wsrep_load(): Galera ... loaded successfully.

WSREP: Could not open state file for reading: '/var/lib/mysql//grastate.dat'

WSREP: Found saved state: 00000000-0000-0000-0000-000000000000:-1,

WSREP: GCache history reset: old(00000000-0000-0000-0000-000000000000:0)

-> new(00000000-0000-0000-0000-000000000000:-1)

...

WSREP: gcomm: connecting to group 'awesome_cluster'...

WSREP: (55144012, 'tcp://0.0.0.0:4567') connection established to ...

The “00000000-0000-0000-0000-000000000000” means it hasn’t synced yet. Once it syncs you’ll see that changed to something like “4f81e556-f01d-11e6-91fa-462bd707f4d5”.

However, if you get the following error, your firewall may still be blocking one or more of the 45xx or 46xx ports. You may want to test that you can connect via nmap or telnet. Remember 4567 is a udp port. I got it because on of the ports was not allowed.

[Warning] WSREP: Failed to prepare for incremental state transfer:

Local state UUID (00000000-0000-0000-0000-000000000000) does not

match group state UUID (4f81e556-f01d-11e6-91fa-462bd707f4d5): 1

(Operation not permitted)

You can check the status of the cluster inside of mysql too. On any host connect to the database.

[root@hostAny]: mysql -u root -p

And ask it for the cluster status at the MariaDB prompt.

SHOW STATUS LIKE 'wsrep_cluster_size';

That should show:

+--------------------+-------+

| Variable_name | Value |

+--------------------+-------+

| wsrep_cluster_size | 2 |

+--------------------+-------+

The number will be however many are connected. You’ll get 1 when you start the bootstrap, and then 2, 3 and so forth as you connect the others. You can also use SHOW STATUS LIKE 'wsrep%'; to show all the stats, options, and info.

With that your second host is now connected. It will sync whatever databases are on the first. Proceed to go on to the others, start up the services, and verify they have synced.

Once you are done, you can Ctrl+C the first one, and start it’s service properly. If everything is working right, it will rejoin the cluster without any issue.

Indeed, you can now stop any host or mysql instance. Just make sure you have at least 2 instances running to keep the cluster going.

Root password

If you haven’t done so, setup your root password. You only have to do this on one of the hosts. It syncs to the others. You may also want to run mysql_secure_installation to get rid of testing databases.

If you want to block network root access, that would be ok. The cluster doesn’t need (mysql) root access to sync. And I find I can just manage the one I’m on and the changes sync to the others. Unless of course you have an application that needs root access, and it’s not on one of the servers.

Trying it out

Ok, let’s see it in action. On one host, let’s make a database by connecting to the database on host01.

[root@host01]: mysql -u root -p

And creating the database at the MariaDB prompt.

CREATE DATABASE sandbox;

CREATE USER 'kid'@'%' IDENTIFIED BY 'PASSWORD';

GRANT ALL PRIVILEGES ON sandbox.* TO 'kid'@'%' WITH GRANT OPTION;

FLUSH PRIVILEGES;

Info

Must use ‘%’ instead of just localhost so it can reach it from the other hosts.

Then on another host02, verify that the database is there. Connect to the host02 database.

[root@host02]: mysql -u root -p

And display the databases.

show databases;

+--------------------+

| Database |

+--------------------+

| sandbox |

...

+--------------------+

Verify the user kid exists from host02:

[root@host02]: mysql -u root -p

SELECT User, Host FROM mysql.user WHERE Host <> 'localhost';

+----------+-----------+

| User | Host |

+----------+-----------+

| kid@% | % |

...

+----------+-----------+

Tip

On the next steps I’ll specify which host we’re running the sql commands on, using your current connection via mysql -u root -p from above.

Now let’s make a table on host02:

CREATE TABLE sandbox.toys ( id INT NOT NULL AUTO_INCREMENT, type VARCHAR(50), quant INT, color VARCHAR(25), PRIMARY KEY(id));

And add some data:

INSERT INTO sandbox.toys (type, quant, color) VALUES ("truck", 2, "yellow");

Now, go back to host01 and check it out:

SELECT * FROM sandbox.toys;

That should output:

+----+--------+-------+--------+

| id | type | quant | color |

+----+--------+-------+--------+

| 1 | truck | 2 | yellow |

+----+--------+-------+--------+

Let’s add a red car from host01:

INSERT INTO sandbox.toys (type, quant, color) VALUES ("car", 1, "red");

Go over to host03, connect to the database using mysql -u root -p, and check it out:

SELECT * FROM sandbox.toys;

That should output:

+----+--------+-------+--------+

| id | type | quant | color |

+----+--------+-------+--------+

| 1 | truck | 2 | yellow |

| 2 | car | 1 | red |

+----+--------+-------+--------+

There it is. Already synced and ready to go.

Connecting your App

To connect your app, have it connect to the 3306 port of a cluster node, or use a load balancer.

Load Balancing and Fail Over

While it’s possible to just split the requests between all servers, this could cause ‘deadlocks’ if the same data is updated by different requests at the same time.

This can be minimized by splitting read/writes, and sending the writes to one server. More on that to come.

Benchmarking

To benchmark, there’s lots of programs out there. I used sysbench. Here’s how I did it.

First, make a sbtest user and database. I set a password for it too.

[root@host02]: mysql -u root -p

And at the MariaDB prompt:

CREATE DATABASE sbtest;

CREATE USER 'sbtest'@'%' IDENTIFIED BY 'PASSWORD';

GRANT ALL PRIVILEGES ON sbtest.* TO 'sbtest'@'%' WITH GRANT OPTION;

FLUSH PRIVILEGES;

Remember, you only have to do this on once and it will sync to the whole cluster.

In this we will assume the host HOST is your load balancer or gateway to your cluster. Change it according to how you set up your cluster.

First, prepare the database:

sysbench --test=oltp --mysql-host=HOST --db-driver=mysql \

--mysql-port=3306 --mysql-db=sbtest --mysql-password=PASSWORD cleanup

sysbench --test=oltp --mysql-host=HOST --db-driver=mysql \

--mysql-port=3306 --mysql-db=sbtest --mysql-password=PASSWORD prepare

Then we can run the read/write test. This will run it for 60 seconds and show the results:

sysbench --test=oltp --mysql-host=HOST --db-driver=mysql \

--mysql-port=3306 --mysql-db=sbtest --mysql-password=PASSWORD \

--max-time=60 --max-requests=0 run

Now run the read-only one:

sysbench --test=oltp --mysql-host=HOST --db-driver=mysql \

--mysql-port=3306 --mysql-db=sbtest --mysql-password=PASSWORD \

--max-time=60 --max-requests=0 --oltp-read-only=on run

There are other options like --mysql-engine-trx=yes and --oltp-connect-delay=0 that you can try, but I’ll leave that to you.

Restarting Broken Cluster

I had my entire cluster go down. Trying to start them up, no server would take the lead, which is understandable. No one’s the leader, and none of them want to take it in case they break something. So, per the note in the logs, I went ahead and edited /var/lib/mysql/grastate.dat and set safe_to_bootstrap to 1.

cat /var/lib/mysql/grastate.dat

That should output something like:

# GALERA saved state

version: 2.1

uuid: xxxxxxx-xxx-xxxxx-xxxxxxx

seqno: -1

safe_to_bootstrap: 1

Of course I had a backup of all my data. Just in case. Never do this unless you have to.

Make sure all your mysql instances are down. Pick one as the Primary. I picked the one that I knew was the last one running before they went down.

Then run ‘galera_new_cluster’ on it. It started and just sat there. Looking at the logs:

Flow-control interval: [16, 16]

Restored state OPEN -> JOINED (433557)

WSREP: Member 0.0 (pod01) synced with group.

WSREP: Shifting JOINED -> SYNCED (TO: 433557)

WSREP: New cluster view: global state: 4f81e556-f01d-11e6-91fa-462bd707f4d5:433557, view# 1: Primary, number of nodes: 1, my index: 0, protocol version 3

...

Started MariaDB database server

It then forked from the terminal. I started up the other ones normally (systemctl start mariadb) and all of them showed up as synced and part of the cluster.

Member x synced with group

And that’s how I got it back up. Checked my data and it was all still there. Nothing lost but some time.

Lesson learned: It’s a cluster and as such needs at least 2 working nodes up and connected to be able to keep the running state.

Conclusion

Setting up a Galera Cluster isn’t very hard, and can present some great speed benefits as well as failover.

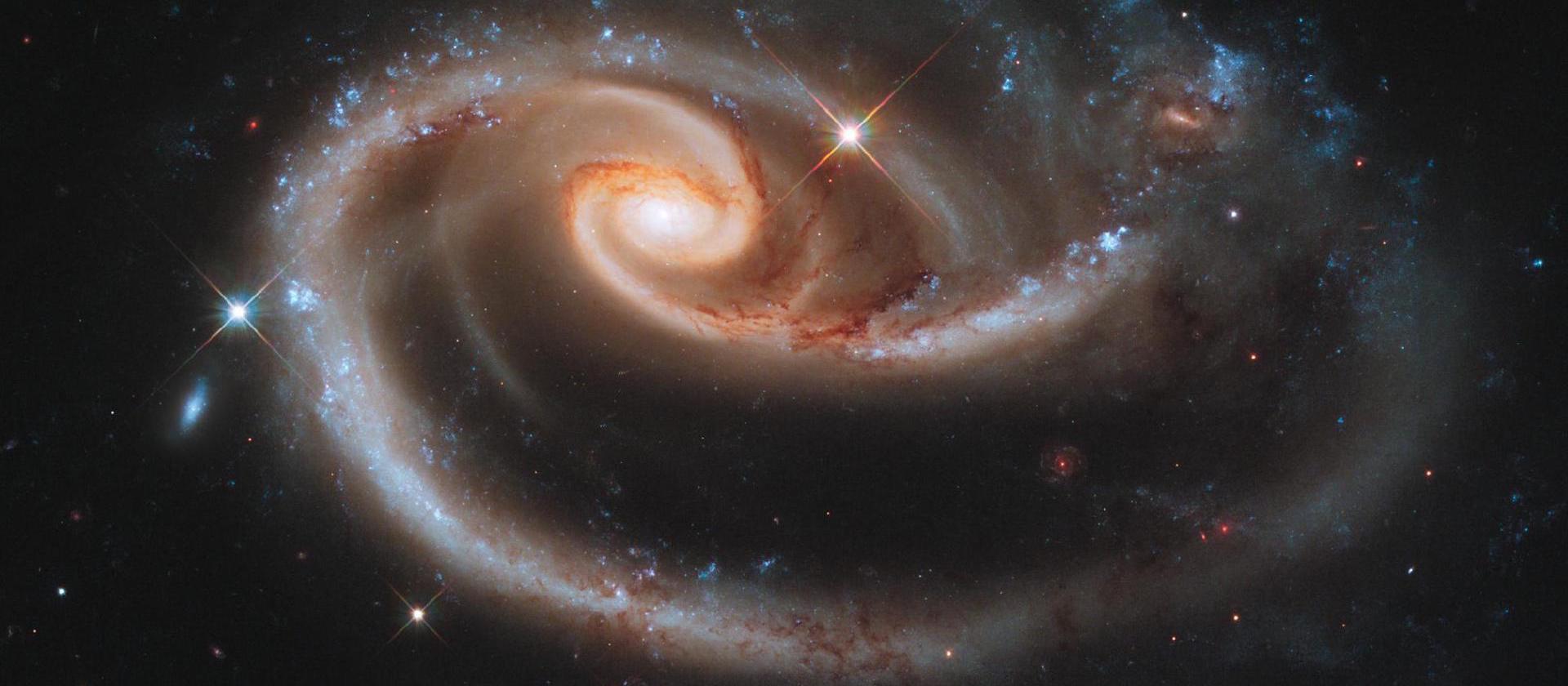

And as for the opening image, it’s from some interacting galaxies called “Arp 273”, posted at NASA in 2010.

Arp 273

From NASA’s page (Archived version, as NASA removed the page):

The larger of the spiral galaxies, known as UGC 1810, has a disk that is distorted into a rose-like shape by the gravitational tidal pull of the companion galaxy below it, known as UGC 1813. This image is a composite of Hubble Wide Field Camera 3 data taken on December 17, 2010, with three separate filters that allow a broad range of wavelengths covering the ultraviolet, blue, and red portions of the spectrum.